HPE StoreVirtual VSA Software & Components

HPE StoreVirtual VSA Software transforms your server’s internal or direct-attached storage into a fully-featured shared storage array without the cost and complexity associated with dedicated storage. StoreVirtual VSA is a virtual storage appliance optimized for VMware vSphere. StoreVirtual VSA creates a virtual array within your application server and scales as storage needs evolve, delivering a comprehensive enterprise-class feature set that can be managed by an IT generalist. The ability to use internal storage within your environment greatly increases storage utilization. The unique scale-out architecture offers the ability to add storage capacity on-the-fly without compromising performance. Its built-in high availability and disaster recovery features ensure business continuity for the entire virtual environment.

Simple and Flexible to Manage

Enjoy all the benefits of traditional SAN storage without a dedicated storage device. HPE StoreVirtual VSA Software allows you to build enterprise-level, highly available, shared storage functionality into your server infrastructure to deliver lower cost of ownership and superior ease of management.

All StoreVirtual VSA nodes in your environment, onsite or across multiple sites, can be managed from the Centralized Management Console (CMC). The CMC features a simple, built-in best practice analyzer and an easy-to-use update process. Add more internal storage capacity to the cluster simply by adding servers with StoreVirtual VSA installed. No external storage device is required: create shared storage out of internal or external disk capacity, whether DAS or SAN (FC or iSCSI).

Snapshots provide instant, point-in-time volume copies that are readable, writeable, and mountable for use by applications and backup software. StoreVirtual's multi-fault protection helps you avoid data loss when any single component in a storage node fails. Remote Copy enables centralized backup and disaster recovery on a per-volume basis and leverages application-integrated snapshots for faster recovery.
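The text does not say how StoreVirtual implements snapshots internally. One generic way to make a point-in-time copy instant is copy-on-write. Taking the snapshot copies nothing up front. A block's old contents are preserved only when that block is first overwritten afterwards.

The minimal Python sketch below illustrates that idea. The `Volume` class and its methods are hypothetical. Its snapshots are read-only views, which is simpler than the writeable snapshots described above.

```python
class Volume:
    """Toy block volume with copy-on-write snapshots (hypothetical model,
    not StoreVirtual's implementation)."""

    def __init__(self, num_blocks):
        # Live data: block number -> contents.
        self.blocks = {i: b"\x00" for i in range(num_blocks)}
        # One dict per snapshot, holding only blocks overwritten after it was taken.
        self.snapshots = []

    def snapshot(self):
        """Take an instant point-in-time snapshot; no data is copied yet."""
        self.snapshots.append({})
        return len(self.snapshots) - 1

    def write(self, block, data):
        """Overwrite a block, first preserving its old contents for the newest snapshot."""
        newest = self.snapshots[-1] if self.snapshots else None
        if newest is not None and block not in newest:
            newest[block] = self.blocks[block]
        self.blocks[block] = data

    def read_snapshot(self, snap_id, block):
        """Read a block as it was when snapshot `snap_id` was taken."""
        # The first preserved copy at or after this snapshot is the right one;
        # if none was preserved, the block has not changed since the snapshot.
        for preserved in self.snapshots[snap_id:]:
            if block in preserved:
                return preserved[block]
        return self.blocks[block]


if __name__ == "__main__":
    vol = Volume(4)
    vol.write(0, b"A")
    s0 = vol.snapshot()
    vol.write(0, b"B")
    print(vol.read_snapshot(s0, 0), vol.blocks[0])
```

Reading a snapshot only touches the blocks that changed after it. This is why the copy can be taken instantly, regardless of volume size.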

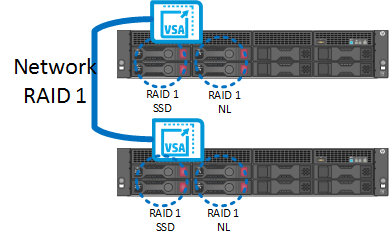

Network RAID stripes and protects multiple copies of data across a cluster of storage nodes, eliminating any single point of failure in the StoreVirtual array. Applications have continuous data availability in the event of a disk, controller, storage node, power, network, or site failure. Create availability zones within your environment across racks, rooms, buildings, and cities and provide seamless application high availability with transparent failover and failback across zones—automatically.

Increase Scalability and Storage Efficiency

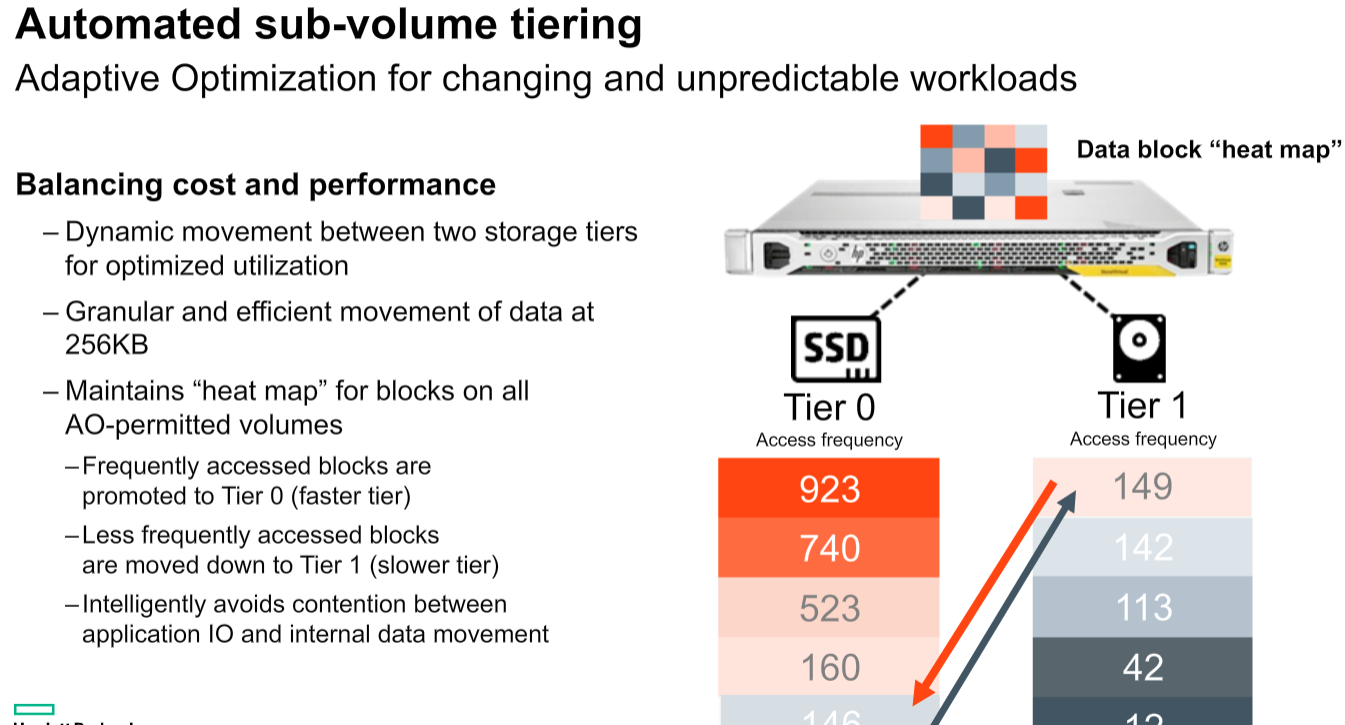

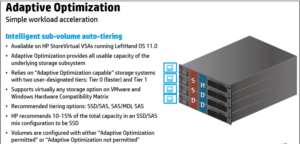

Use StoreVirtual VSA with solid-state drives (SSDs) to provide a high-performance storage solution in your environment. Create an all-flash tier for maximum performance, or use StoreVirtual Adaptive Optimization as a lower-cost alternative that creates automated tiers with an optimized amount of SSD capacity. Maximize storage utilization by deploying high-performance arrays at the main site and cost-effective appliances at remote sites. Use management plug-ins for VMware vCenter. Lower storage costs and achieve high availability with as few as two nodes that scale out easily.

The scale-out storage architecture allows the consolidation of internal and external disks into a pool of shared storage. All available capacity and performance are aggregated and accessible to every volume in the cluster. As storage needs grow, the cluster scales out linearly while remaining online.

Components

Built on an open-standards platform, StoreVirtual VSA software can run on any modern x86-based hardware in VMware vSphere, Microsoft Hyper-V, and Linux KVM hypervisors.

StoreVirtual technology components include targets (storage systems and storage clusters) in a networked infrastructure. Servers and virtual machines act as initiators with access to the shared storage. The LeftHand OS uses the industry-standard iSCSI protocol over Ethernet to provide block-based storage to application servers on the network. For high availability, it uses a Failover Manager (FOM) or a Quorum Disk.
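On an iSCSI network, each target and initiator is identified by an iSCSI name. The most common form is the iSCSI Qualified Name (IQN) defined in RFC 3720. An IQN has four parts:

- the prefix `iqn.`
- the year and month the naming authority owned its domain
- the reversed domain name
- an optional colon-separated unique string

The sketch below builds and parses names in that format. The helper names and the `example.com` values are hypothetical. The exact IQNs that a StoreVirtual cluster assigns to its volumes are not covered here. The regular expression is a simplified check, not a full RFC 3720 validator.

```python
import re

# Simplified pattern for the RFC 3720 "iqn." format:
#   iqn.<yyyy-mm>.<reversed domain>[:<unique string>]
IQN_RE = re.compile(r"^iqn\.(\d{4})-(0[1-9]|1[0-2])\.([a-z0-9.-]+)(?::(.+))?$")


def build_iqn(year, month, domain, unique=None):
    """Build an IQN such as iqn.2003-10.com.example:storage1."""
    authority = ".".join(reversed(domain.lower().split(".")))
    name = f"iqn.{year:04d}-{month:02d}.{authority}"
    return f"{name}:{unique}" if unique else name


def parse_iqn(name):
    """Split an IQN into its parts; raise ValueError if it does not match."""
    # Normalize to lower case before matching (simplified normalization).
    match = IQN_RE.match(name.lower())
    if match is None:
        raise ValueError(f"not a valid IQN: {name!r}")
    year, month, authority, unique = match.groups()
    return {"date": f"{year}-{month}", "authority": authority, "unique": unique}


if __name__ == "__main__":
    iqn = build_iqn(2003, 10, "example.com", "vol1")
    print(iqn, parse_iqn(iqn))
```

An initiator uses names like these to log in to a specific target. This is why volumes presented by the cluster are addressed by IQN rather than by a disk path.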

HPE StoreVirtual Software

HP StoreVirtual VSA is a full-featured virtual storage appliance (VSA), supported in production environments, that provides block-based storage via iSCSI.

A VSA is a virtual appliance deployed in a VMware environment that aggregates and abstracts the underlying physical storage into a common storage pool. This pool is presented to the hypervisor and can be used to store virtual machine disks and related files.

StoreVirtual VSA can use both existing VMFS datastores and RDMs (raw device mappings of raw LUNs) to store data. It can also be configured for sub-volume tiering, which moves chunks of data between tiers. Like its “physical” HP StoreVirtual counterpart, StoreVirtual VSA is a scale-out solution: if you need more storage capacity, resilience, or performance, you can deploy additional StoreVirtual VSA nodes (i.e., virtual appliances).
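The text does not describe how sub-volume tiering decides which chunks to move. A common general approach is heat-based: count accesses per chunk (page) and keep the hottest chunks on the SSD tier.

The minimal sketch below assumes exactly that approach. The function names, the page IDs, and the `ssd_pages` budget are hypothetical and are not taken from Adaptive Optimization.

```python
from collections import Counter


def plan_ssd_tier(access_log, ssd_pages):
    """Choose which pages belong on the SSD tier.

    access_log is a sequence of page IDs, one entry per I/O; ssd_pages is
    how many pages fit on SSD. The most frequently accessed pages win.
    """
    heat = Counter(access_log)
    return {page for page, _count in heat.most_common(ssd_pages)}


def tier_moves(current_ssd, target_ssd):
    """Return (pages to promote to SSD, pages to demote to the slower tier)."""
    promote = target_ssd - current_ssd
    demote = current_ssd - target_ssd
    return promote, demote


if __name__ == "__main__":
    log = [1, 1, 1, 2, 2, 3, 4, 4, 4, 4]
    target = plan_ssd_tier(log, ssd_pages=2)
    print(target, tier_moves({2, 3}, target))
```

A practical tiering engine would typically also age its counters over time. Otherwise data that was hot in the past stays on SSD even after it has gone cold.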

Storage System or Storage Node

A storage node is a server that virtualizes its direct-attached storage. In the case of StoreVirtual nodes, each one includes controller functionality—no external controllers needed. Storage nodes require minimal user configuration to bring them online as available systems within the Centralized Management Console (CMC).

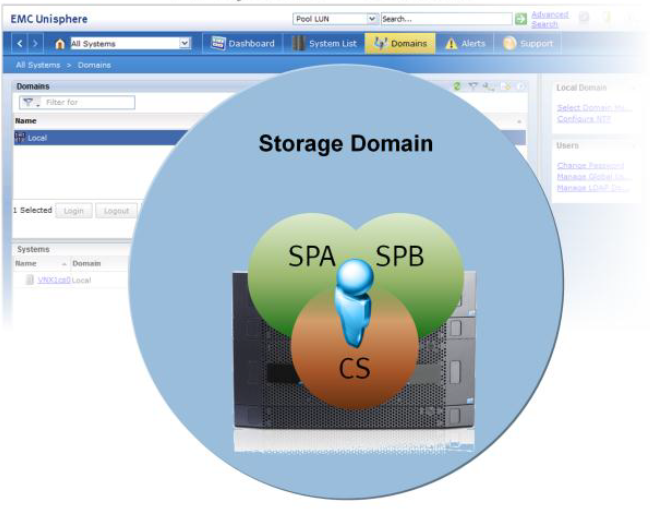

StoreVirtual Cluster

Multiple StoreVirtual VSAs running on multiple servers create a scalable pool of storage with the ability to make data highly available. We can aggregate two or more storage nodes into a flexible pool of storage, called a storage cluster.

Multiple clusters can be aggregated together into management groups. Volumes, clusters and management groups within the shared storage architecture can be managed centrally through a single console.

Management groups

A management group is a logical container that allows the management of one or more HP StoreVirtual VSAs, clusters, and volumes. Credentials for the management group are set at configuration time. These credentials are then used for any management task on any HP StoreVirtual node that belongs to that management group.
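The containment described in this section can be summarized as a small data model:

- storage nodes are grouped into clusters
- clusters and their volumes are grouped into a management group
- each management group has one set of credentials

The Python sketch below is hypothetical and for illustration only. The class and field names are invented. Hashing the password with SHA-256 is a toy choice, not a description of how LeftHand OS stores credentials.

```python
from __future__ import annotations

import hashlib
import hmac
from dataclasses import dataclass, field


def _digest(password: str) -> str:
    # Toy hashing for illustration; real systems use a salted, slow KDF.
    return hashlib.sha256(password.encode()).hexdigest()


@dataclass
class StorageNode:
    name: str
    capacity_gb: int


@dataclass
class Volume:
    name: str
    size_gb: int
    network_raid: str  # the protection level is chosen per volume


@dataclass
class Cluster:
    name: str
    nodes: list[StorageNode] = field(default_factory=list)
    volumes: list[Volume] = field(default_factory=list)

    def raw_capacity_gb(self) -> int:
        # The cluster pools the capacity of all its nodes.
        return sum(node.capacity_gb for node in self.nodes)


@dataclass
class ManagementGroup:
    name: str
    admin_user: str
    password_digest: str = field(repr=False)
    clusters: list[Cluster] = field(default_factory=list)

    @classmethod
    def create(cls, name: str, admin_user: str, password: str) -> ManagementGroup:
        # Credentials are set once, when the management group is configured.
        return cls(name, admin_user, _digest(password))

    def authorize(self, user: str, password: str) -> bool:
        # The same credentials gate management tasks on every node in the group.
        return user == self.admin_user and hmac.compare_digest(
            self.password_digest, _digest(password)
        )
```

Usage: create a group with `ManagementGroup.create("MG-1", "admin", "s3cret")`, then append `Cluster` objects to its `clusters` list. Every management call would then be checked with `authorize` against that one group-level credential.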

FOM & Quorum Disk

The Failover Manager (FOM) is designed to provide automated and transparent failover capability. For fault tolerance in a single-site configuration, the FOM runs as a virtual appliance in a VMware vSphere, Microsoft Hyper-V Server, or Linux KVM environment. It must be installed on storage that is not provided by the StoreVirtual installation it is protecting.

The FOM participates in the management group as a manager; however, it performs quorum operations only and does not perform data movement operations. It is especially useful in a multi-site stretch cluster, where it manages quorum for the multi-site configuration without requiring additional storage systems to act as managers at the sites. For each management group, the StoreVirtual Management Group Wizard sets up at least three management devices at each site. The FOM manages latency and bandwidth across these devices and continually checks data availability by comparing one online node against another.

If a node fails, the FOM detects a discrepancy between the two online nodes and the one offline node, and at that point it notifies the administrator. This process requires at least three devices, with at least two of them active and aware at any given time, so that the system can be checked for consistency. If one node fails and a second node remains online, the third device (for example, the FOM) maintains quorum by acting as a “witness” that attests the second node is a reliable source for data.
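The three-device requirement follows from a majority vote: a management group keeps quorum only while more than half of its managers can reach each other. Here is a minimal sketch of that rule. It assumes a simple strict-majority count, and the function and node names are hypothetical.

```python
def has_quorum(online, total):
    """True when a strict majority of managers is online."""
    return online > total // 2


def quorum_after_failure(managers, failed):
    """Check whether the surviving managers still form a majority."""
    online = [m for m in managers if m not in failed]
    return has_quorum(len(online), len(managers))


if __name__ == "__main__":
    # Two storage nodes alone: losing either one leaves 1 of 2, not a majority.
    print(quorum_after_failure(["vsa1", "vsa2"], {"vsa1"}))  # prints False
    # Two storage nodes plus a FOM: losing one node leaves 2 of 3.
    print(quorum_after_failure(["vsa1", "vsa2", "fom"], {"vsa1"}))  # prints True
```

The same rule explains why adding a fourth manager does not improve failure tolerance. With four managers, three must still be online, so the group still survives only one failure.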

In smaller environments with only two storage nodes and no third device available to provide quorum, you can use either of the following two options:

- Supply a third node onsite with StoreVirtual VSA installed and use FOM to maintain quorum

- Set up 2-Node Quorum on a shared disk, using LeftHand OS 12.5 or later

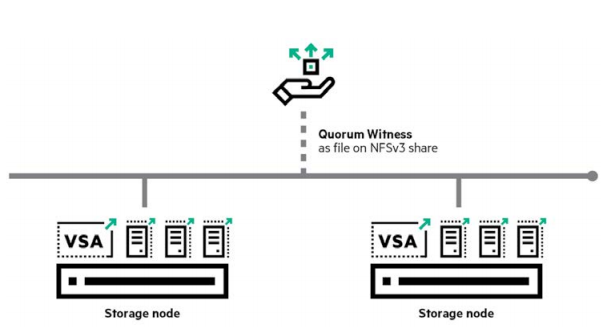

StoreVirtual 2-Node Quorum is a mechanism developed to ensure high availability and transparent failover for 2-node management groups in any number of satellite sites, such as remote offices or retail stores. It is a cost-effective, low-bandwidth alternative to the FOM and does not require a virtual machine at the site. Instead, it relies on a centralized Quorum Witness, in the form of an NFSv3 file share, as the tie-breaker between the two storage nodes, as shown below.

Quorum Witness uses a shared disk to determine which of the two nodes should be considered a reliable resource in the event of a failure. The shared disk is an NFS share that both nodes in the management group can access.
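The exact protocol between the nodes and the Quorum Witness is not described here. The sketch below is a simplified, hypothetical model of a file-share tie-breaker. A node that loses contact with its peer tries to atomically create a lock file on the shared directory. Whichever node creates it first keeps serving data, and the other stands down.

A local temporary directory stands in for the NFSv3 share. The `claim_witness` name and the `quorum.lock` file name are invented.

```python
import os
import tempfile


def claim_witness(share_dir, node_name, lock_name="quorum.lock"):
    """Try to win the tie-break by creating the lock file exclusively.

    Returns True if this node created the file (and stays online),
    False if the peer already holds it.
    """
    path = os.path.join(share_dir, lock_name)
    try:
        # O_CREAT | O_EXCL fails if the file already exists, so only one
        # caller can succeed.
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as lock_file:
        lock_file.write(node_name)  # record which node won
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as share:
        print(claim_witness(share, "vsa1"))  # first claimant wins
        print(claim_witness(share, "vsa2"))  # peer finds the lock taken
```

A production tie-breaker would also need a way to release the claim once the failed node recovers. That step is omitted here.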

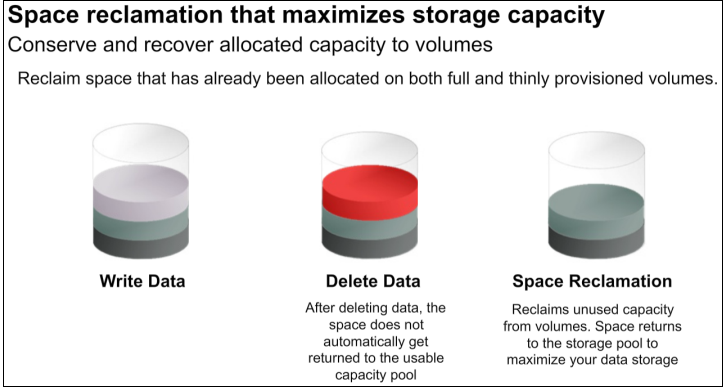

Volume

A volume is a unit of shared block storage created with Network RAID protection. The Network RAID level (that is, the data protection level) can be set per volume, so multiple volumes with different Network RAID levels can coexist on a StoreVirtual cluster. Volumes are accessed through the iSCSI protocol.

Network RAID protection spreads data across different VSAs, in the same way that traditional RAID spreads data across different physical disks within the same array.
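The sketch below makes the analogy with disk RAID concrete by modeling mirrored Network RAID placement. It assumes a simple round-robin layout in which each block's copies land on consecutive nodes of the cluster. Two copies correspond to a two-way mirror such as Network RAID-10. The placement function, node names, and capacity formula are simplifications for illustration, not the actual LeftHand OS layout.

```python
def place_block(block_id, nodes, copies):
    """Return the nodes that hold each copy of a block (round-robin layout)."""
    if not 1 <= copies <= len(nodes):
        raise ValueError("copies must be between 1 and the number of nodes")
    start = block_id % len(nodes)
    return [nodes[(start + i) % len(nodes)] for i in range(copies)]


def volume_survives(num_blocks, nodes, copies, failed):
    """True if every block still has at least one copy on a healthy node."""
    return all(
        any(node not in failed for node in place_block(b, nodes, copies))
        for b in range(num_blocks)
    )


def usable_capacity_gb(node_capacities_gb, copies):
    """Usable space when every block is stored `copies` times (ignores overhead)."""
    return sum(node_capacities_gb) / copies


if __name__ == "__main__":
    cluster = ["vsa1", "vsa2", "vsa3"]
    print(place_block(0, cluster, 2))  # ['vsa1', 'vsa2']
    print(volume_survives(30, cluster, 2, {"vsa2"}))  # True: mirror covers one node
    print(volume_survives(30, cluster, 1, {"vsa2"}))  # False: no second copy
```

Because `copies` is an argument rather than a cluster-wide setting, two volumes in the same cluster can use different protection levels, as described above. Only mirroring is modeled here.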

CMC (HP StoreVirtual Centralized Management Console)

All StoreVirtual VSA nodes in your environment, onsite or across multiple sites, can be managed from the Centralized Management Console (CMC). The CMC features a simple, built-in best practice analyzer and an easy-to-use update process.

When deploying the FOM, you must select storage that is not provided by the StoreVirtual installation it is protecting.

Note: A pop-up message appears before deployment, as shown in the image below.
